
Socdocs: Tackling Usecase Versioning & Documentation in QRadar

SOC Documentation issues

Consistent and easy-to-use documentation of rules and building blocks in QRadar has been a thorn in my side for quite some time. QRadar allows for some rule notes, but doesn't provide any versioning or audit logging of who changed what. Combine that with a SOC that requires a lot of custom rule development, and keeping your documentation in order becomes a nightmare.

Approaches I have seen used to document these rules:

- Excel on SharePoint: changes to the Excel file were versioned, so tracking the rule syntax in a spreadsheet worked, but it was hell to maintain.

- Confluence: creating a page per rule and copy-pasting the rule syntax into it gives you search, which is a bonus. Still too much manual labor, though.

- Rule notes: keeping a changelog in the rule notes works, but without a quick full-text search across the rule catalog to check whether some variant of a rule already exists, it isn't that effective. It also lacks any kind of versioning, unless you count custom content backups as versioning.

Each of these covers part of what I want in my ultimate documentation tool: versioning, audit logging, and quick search. But they all miss one key ingredient: automation.

We did try to work around these limitations with the Rule Explorer and Use Case Manager apps, but they didn't work quite the way we wanted. So, time to roll up our sleeves and build something that works not just for us, but for everyone else with the same problem.

First, let's define the steps we'll take to tackle this problem:

- Get the rules: we need some way to get the ruleset out of QRadar.

- Create documentation pages: based on the ruleset, I want a set of markdown pages that describe the rules with the following data:
  - Rule description
  - Rule origin
  - Rule syntax
  - A changelog that is easy to create

- Host the latest set of these pages and make them searchable.

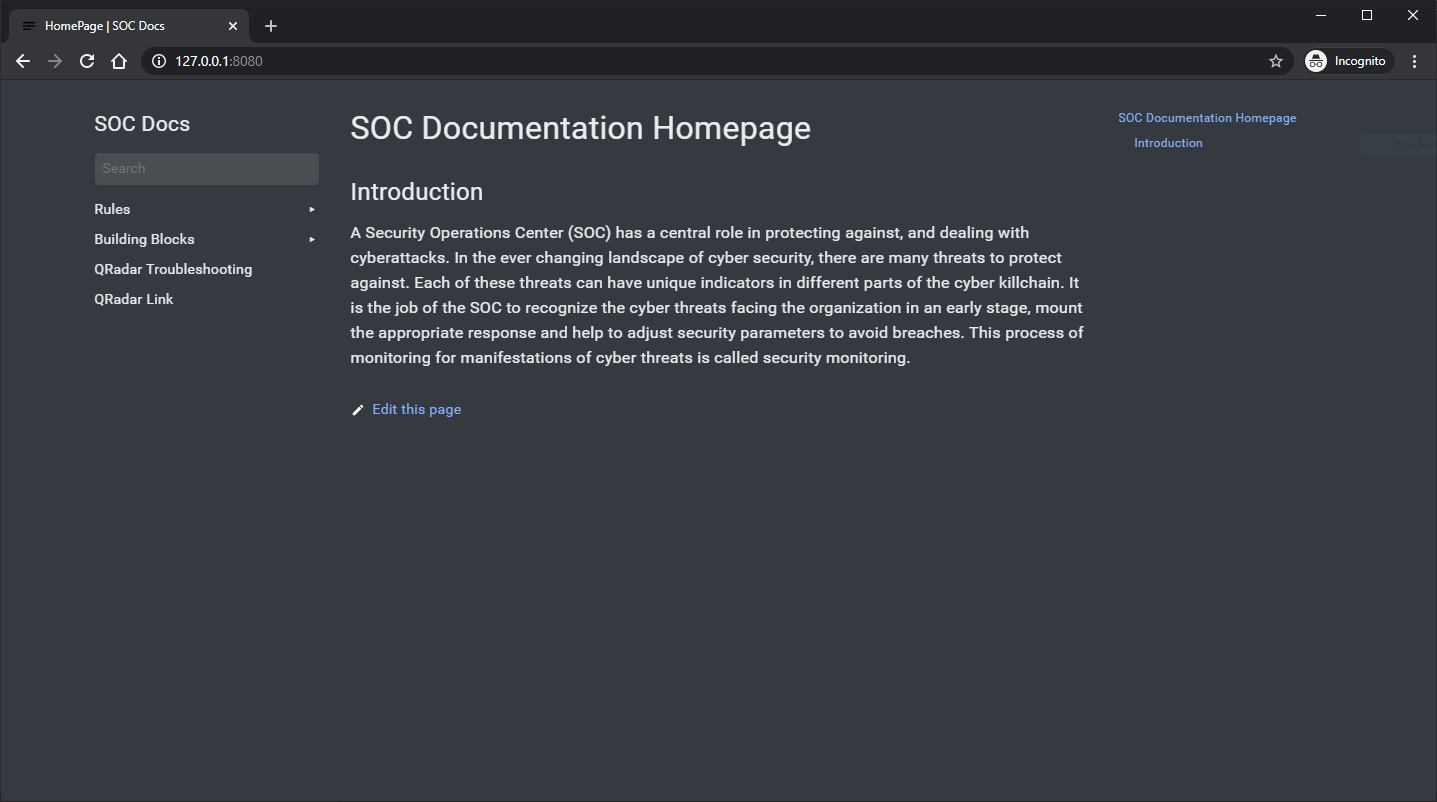

TL;DR: I made a toolkit that does exactly that: https://github.com/dpb-infosec/socdocs. Run the following command and browse to http://127.0.0.1:8080 to see it in action:

```shell
docker run -it -p 8080:80 dpbinfosec/socdocs
```

Step 1: Get the Rules

The first part of our journey is getting a complete dump of the ruleset in its current state. A look at the QRadar REST API documentation quickly shows there's no way to get the rule syntax through some simple HTTP calls. No problem! The Rule Explorer app ingests an XML export that you can create with the contentManagement.pl script.

I use the following command line to generate the bare-minimum XML I need:

```shell
/opt/qradar/bin/contentManagement.pl \
    --action export \
    --content-type 3 --id all
```

The resulting XML looks like this (the rule_data value is truncated):

```xml
<?xml version="1.0" encoding="UTF-8" standalone="yes"?>
<content>
  <qradarversion>2019.18.4.20200629201233</qradarversion>
  <custom_rule>
    <base_capacity>3432329</base_capacity>
    <origin>OVERRIDE</origin>
    <mod_date>2020-05-08T10:41:20.973+02:00</mod_date>
    <rule_data>PHJ1bGUgb3ZlcnJpZG...cnVsZT4=</rule_data>
    <uuid>SYSTEM-1376</uuid>
    <capacity_timestamp>1599170974000</capacity_timestamp>
    <rule_type>5</rule_type>
    <average_capacity>3432329</average_capacity>
    <base_host_id>53</base_host_id>
    <id>1376</id>
    <create_date>2006-05-05T07:17:35.821+02:00</create_date>
  </custom_rule>
```

The custom_rule XML is a bit exotic; more about that in the next part :) Nevertheless, this seemed the easiest way to pull the information, and since I want to keep the processing on the QRadar appliances themselves to a minimum, I decided to work from that XML. I also created a small cron job that generates the XML and pushes it to a git repository. This plays to our advantage, as we can use those pushes as triggers for a CI/CD pipeline.
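The cron job itself isn't part of this post. As a minimal sketch, assuming the export has already been written to a file inside a local clone of the documentation repository (the repository path, file name, and commit message are hypothetical), a Python script that cron runs right after the export could commit and push the file only when it actually changed:

```python
from __future__ import annotations

import subprocess
from pathlib import Path


def git(repo: Path, *args: str, check: bool = True) -> subprocess.CompletedProcess:
    """Run a git command inside `repo`."""
    return subprocess.run(["git", "-C", str(repo), *args], check=check)


def sync_export(repo: Path, export_file: Path, push: bool = True) -> bool:
    """Commit (and optionally push) the rule export if it changed.

    Returns True when a new commit was created, False when nothing changed.
    """
    git(repo, "add", str(export_file))
    # `git diff --cached --quiet` exits with 0 when nothing is staged.
    if git(repo, "diff", "--cached", "--quiet", check=False).returncode == 0:
        return False
    # Hypothetical commit message.
    git(repo, "commit", "-q", "-m", "Update QRadar rule export")
    if push:
        # The push is the event the CI/CD pipeline reacts to.
        git(repo, "push")
    return True
```

Skipping the commit when nothing is staged keeps the history (and the pipeline) quiet on days when no rule changed. From cron you would call something like `sync_export(Path("/path/to/clone"), Path("/path/to/clone/export.xml"))`, with paths adjusted to your setup.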

Step 2: Create documentation pages

Working from the XML above, we have a two-layered structure:

- Some top-level metadata about the rule.

- The actual rule data: a base64-encoded XML document.
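A minimal sketch of peeling those two layers apart with Python's standard library, assuming the export is read as bytes. The field names come from the sample above; the `RuleRecord` type is hypothetical, and because the inner rule XML's layout isn't covered here, the sketch only parses it into an element tree without interpreting it:

```python
from __future__ import annotations

import base64
import xml.etree.ElementTree as ET
from dataclasses import dataclass


@dataclass
class RuleRecord:
    """Hypothetical container for one <custom_rule> entry of the export."""
    uuid: str
    origin: str
    mod_date: str
    rule_type: str
    rule_xml: ET.Element  # decoded inner rule document


def parse_export(data: bytes) -> list[RuleRecord]:
    """Parse the export and decode each rule's base64-encoded rule_data."""
    root = ET.fromstring(data)
    records = []
    for rule in root.iter("custom_rule"):
        # Layer 1: plain metadata elements on the <custom_rule> element.
        meta = {tag: rule.findtext(tag, default="")
                for tag in ("uuid", "origin", "mod_date", "rule_type")}
        # Layer 2: rule_data holds a base64-encoded XML document.
        encoded = rule.findtext("rule_data", default="").strip()
        if not encoded:
            continue  # nothing to decode for this entry
        inner = base64.b64decode(encoded)
        records.append(RuleRecord(rule_xml=ET.fromstring(inner), **meta))
    return records
```

Usage would be along the lines of `parse_export(Path("export.xml").read_bytes())`, where the file name is an assumption. Reading bytes rather than text lets the parser honour the `encoding` declared in the XML header.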

Since this requires some XML parsing, I opted for Python 3, as I had worked with its XML libraries before.

A quick summary of the script:

- Loop over every rule in the XML

- Create an empty rule object

- Take the metadata I need from the top level and persist it in the rule object

- Decode the rule data XML

- Loop over the elements in the rule data to generate a rule syntax like the one in QRadar

- Persist the rule syntax in the rule object

- Render a markdown file with the completed rule object.

In the end I added a few tweaks, like creating and cleaning up the folder structure.
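A minimal sketch of the last two steps (rendering a completed rule object to markdown and recreating the output folder), under a few assumptions: the `Rule` fields, the section headings, one file per rule named after its UUID, and the small title-only front matter block (for the static site generator) are hypothetical choices. The rule syntax is taken as an already-generated list of lines, because turning the inner rule XML into QRadar-style syntax depends on a schema not shown here:

```python
from __future__ import annotations

import json
import shutil
from dataclasses import dataclass, field
from pathlib import Path


@dataclass
class Rule:
    """Hypothetical rule object filled in by the parser."""
    uuid: str
    name: str
    description: str
    origin: str
    syntax: list[str] = field(default_factory=list)  # one rule test per line


def render_markdown(rule: Rule) -> str:
    """Render one rule as a markdown page with a minimal front matter block."""
    lines = [
        "---",
        f"title: {json.dumps(rule.name)}",  # a JSON string is a valid YAML scalar
        "---",
        "",
        "## Description",
        "",
        rule.description,
        "",
        "## Origin",
        "",
        rule.origin,
        "",
        "## Rule syntax",
        "",
        *[f"- {test}" for test in rule.syntax],
    ]
    return "\n".join(lines) + "\n"


def write_pages(rules: list[Rule], out_dir: Path) -> list[Path]:
    """Recreate out_dir from scratch and write one <uuid>.md file per rule."""
    if out_dir.exists():
        shutil.rmtree(out_dir)  # drop pages of rules that no longer exist
    out_dir.mkdir(parents=True)
    paths = []
    for rule in rules:
        path = out_dir / f"{rule.uuid}.md"
        path.write_text(render_markdown(rule), encoding="utf-8")
        paths.append(path)
    return paths
```

Wiping the folder on every run is the simplest way to make deleted rules disappear from the documentation; git still keeps their history.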

This is what I ended up with (subject to improvement): https://github.com/dpb-infosec/socdocs/blob/master/exportparser.py

Now that we have the markdown pages, we can commit them to a git repository. This way every rule is versioned through git, which even gives us the line-by-line changes that were added or removed between two versions of a rule!
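Git computes these diffs for you (`git log -p -- <page>` shows every change made to a page). Purely to illustrate what that line-by-line view looks like for a rule page, here is a sketch using Python's standard `difflib`, which produces the same unified-diff format; the page name and content are made up:

```python
import difflib


def rule_diff(old: str, new: str, page: str) -> list[str]:
    """Return a unified diff between two versions of a rule page."""
    return list(difflib.unified_diff(
        old.splitlines(), new.splitlines(),
        fromfile=f"a/{page}", tofile=f"b/{page}", lineterm=""))


old_page = "## Rule syntax\n\n- test A\n"
new_page = "## Rule syntax\n\n- test A\n- test B\n"
print("\n".join(rule_diff(old_page, new_page, "SYSTEM-1376.md")))
```

The added test shows up as a single `+` line, which is exactly what makes reviewing rule changes in git so convenient.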

Markdown pages are great and render well in most git hosting front-ends, but at this point we still have to do things manually. If anyone forgets to generate a new set of files and update the git repo, we end up with out-of-sync documentation. How about we automate it, so that whenever the XML file is updated, the Python script runs, generates the files, and hosts them somewhere?

Automation through CI/CD pipelines

Let’s take a quick look at the Dockerfile:

```dockerfile
# Build stage: Hugo extended is dynamically linked against glibc,
# which Alpine does not ship, hence the glibc packages below.
FROM alpine:latest AS hugo

ENV HUGO_VERSION 0.74.3
ENV GLIBC_VERSION 2.32-r0

RUN apk add --no-cache \
    bash \
    curl \
    git \
    libstdc++ \
    sudo

RUN wget -q -O /etc/apk/keys/sgerrand.rsa.pub https://alpine-pkgs.sgerrand.com/sgerrand.rsa.pub \
    && wget "https://github.com/sgerrand/alpine-pkg-glibc/releases/download/${GLIBC_VERSION}/glibc-${GLIBC_VERSION}.apk" \
    && apk --no-cache add "glibc-${GLIBC_VERSION}.apk" \
    && rm "glibc-${GLIBC_VERSION}.apk" \
    && wget "https://github.com/sgerrand/alpine-pkg-glibc/releases/download/${GLIBC_VERSION}/glibc-bin-${GLIBC_VERSION}.apk" \
    && apk --no-cache add "glibc-bin-${GLIBC_VERSION}.apk" \
    && rm "glibc-bin-${GLIBC_VERSION}.apk" \
    && wget "https://github.com/sgerrand/alpine-pkg-glibc/releases/download/${GLIBC_VERSION}/glibc-i18n-${GLIBC_VERSION}.apk" \
    && apk --no-cache add "glibc-i18n-${GLIBC_VERSION}.apk" \
    && rm "glibc-i18n-${GLIBC_VERSION}.apk"

# Hugo releases of this era name their Linux archives "Linux-64bit".
RUN mkdir -p /usr/local/src \
    && cd /usr/local/src \
    && curl -L https://github.com/gohugoio/hugo/releases/download/v${HUGO_VERSION}/hugo_extended_${HUGO_VERSION}_Linux-64bit.tar.gz | tar -xz \
    && mv hugo /usr/local/bin/hugo

# Generate the static site into /doc_site/public.
COPY doc_site /doc_site
WORKDIR /doc_site
RUN /usr/local/bin/hugo

# Final stage: nginx serves the generated static pages.
FROM nginx
COPY --from=hugo ./doc_site/public /usr/share/nginx/html
```

As you can see, I use a multi-stage build: a build container generates the static pages with Hugo, and the final nginx image only serves them. Using a Hugo theme worked really well to present the documentation!

Now that we have all the components, it's time to stitch them together with a pipeline. I used GitHub Actions for this demo, but it should be easy to adapt to other CI/CD tools.

Check it out here: https://github.com/dpb-infosec/socdocs/blob/master/.github/workflows/main.yml

Here’s a quick overview of the different steps in the pipeline:

- Check out the repository

- Install Python

- Install the Python dependencies

- Run the parser script

- Build a Docker image and push it to a registry (Docker Hub in this case)
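To debug those steps without pushing a commit, a hypothetical local dry run could look like the sketch below. The `requirements.txt` file name and the argument-less `exportparser.py` invocation are assumptions (the workflow file linked above has the real commands); the image name is the one used in this post. Checkout and Python setup are left out because a local clone and interpreter already cover them:

```python
from __future__ import annotations

import shlex
import subprocess
import sys


def pipeline_steps(image: str, python: str = sys.executable) -> list[list[str]]:
    """Commands mirroring the pipeline steps after checkout and Python setup."""
    return [
        # Assumption: dependencies are listed in requirements.txt.
        [python, "-m", "pip", "install", "-r", "requirements.txt"],
        # Assumption: the parser runs without arguments from the repo root.
        [python, "exportparser.py"],
        ["docker", "build", "-t", image, "."],
        ["docker", "push", image],  # needs a prior `docker login`
    ]


def run_pipeline(steps: list[list[str]], dry_run: bool = True) -> None:
    """Print each step and, unless dry_run is set, execute it, stopping on failure."""
    for step in steps:
        print("$", shlex.join(step))
        if not dry_run:
            subprocess.run(step, check=True)


run_pipeline(pipeline_steps("dpbinfosec/socdocs"))
```

Defaulting to a dry run means nothing is built or pushed until you explicitly pass `dry_run=False`.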

I have added two system rules that I modified to the template project, to show you what the end result looks like. You can see it by running the following command locally:

```shell
docker run -it -p 8080:80 dpbinfosec/socdocs
```

After that, just browse to http://127.0.0.1:8080/
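If you want to script that check (for example as a smoke test after starting the container), a small standard-library sketch that polls the URL until it answers could look like this; the timeout and polling interval are arbitrary assumptions:

```python
import time
import urllib.request


def wait_for_site(url: str = "http://127.0.0.1:8080/", timeout: float = 30.0,
                  interval: float = 1.0) -> int:
    """Poll `url` until it returns a successful response; return the HTTP status.

    Re-raises the last error if the site does not answer within `timeout` seconds.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(url, timeout=interval) as resp:
                return resp.status
        except OSError:
            # URLError, HTTPError, refused connections and socket timeouts
            # are all OSError subclasses: retry until the deadline passes.
            if time.monotonic() >= deadline:
                raise
            time.sleep(interval)
```

Polling instead of a single request gives nginx a moment to start after `docker run`.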

Conclusion

And that's it! From here on out you can run the container anywhere you want, be it a k8s cluster or just a simple VM with Docker support. I hope this helps out a few other SOCs that use QRadar. As always, feel free to send a PR, or ask if something is unclear. I'll update the toolkit from time to time with additional helpful information. See you next month!


2020-09-21 00:00 +0000